How we built a serverless real-time chat on AWS (with API Gateway and Node.js)

Sockets are notorious for being hard to scale because of their stateful nature. Deploying them in a horizontally scalable way is therefore no easy task.

What are WebSockets?

WebSockets are a technology that enables real-time, two-way communication between a client and a server over a single, long-lived connection. Unlike the traditional HTTP request/response model, which is stateless and in which the server can only answer requests that the client initiates, WebSockets keep a persistent, bidirectional channel open between client and server.

This technology has become increasingly popular for building modern web applications that need real-time updates, such as chat applications, online games and collaborative document editing tools. With WebSockets, developers can build highly interactive and dynamic web applications that give a seamless user experience.

WebSockets are supported by all major browsers and can be implemented in a variety of programming languages, including JavaScript, Python and Ruby. The WebSocket protocol is designed to be efficient and lightweight, which makes it a good fit for low-latency communication between clients and servers. In short, WebSockets are a powerful and flexible tool for building modern web applications that need real-time data exchange.

The problem with horizontal scaling

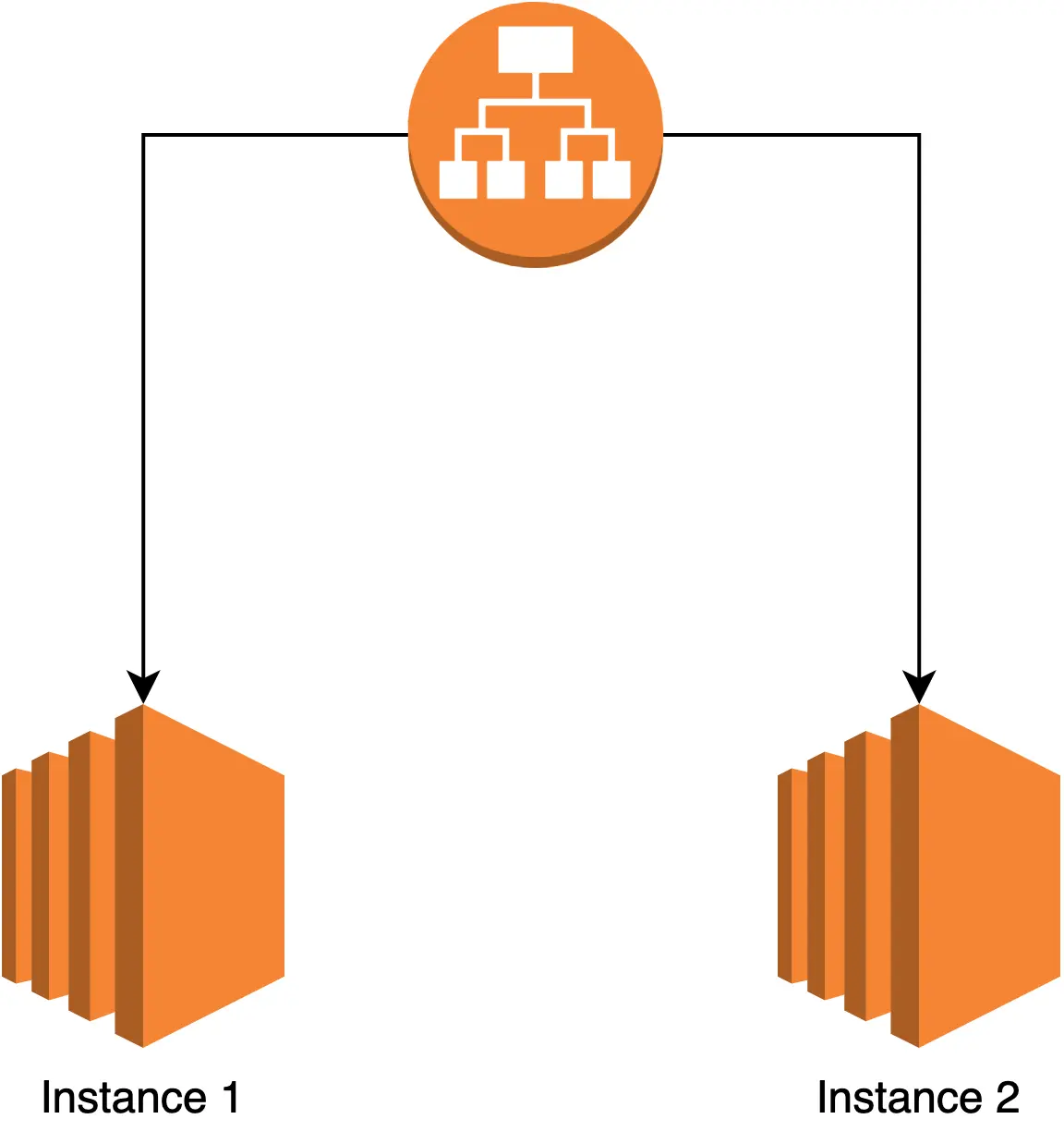

Now imagine trying to scale them horizontally using an ALB (Application Load Balancer). Each new connection is routed to whichever instance behind the ALB the balancing algorithm picks, so from the client's point of view the assignment is effectively arbitrary.

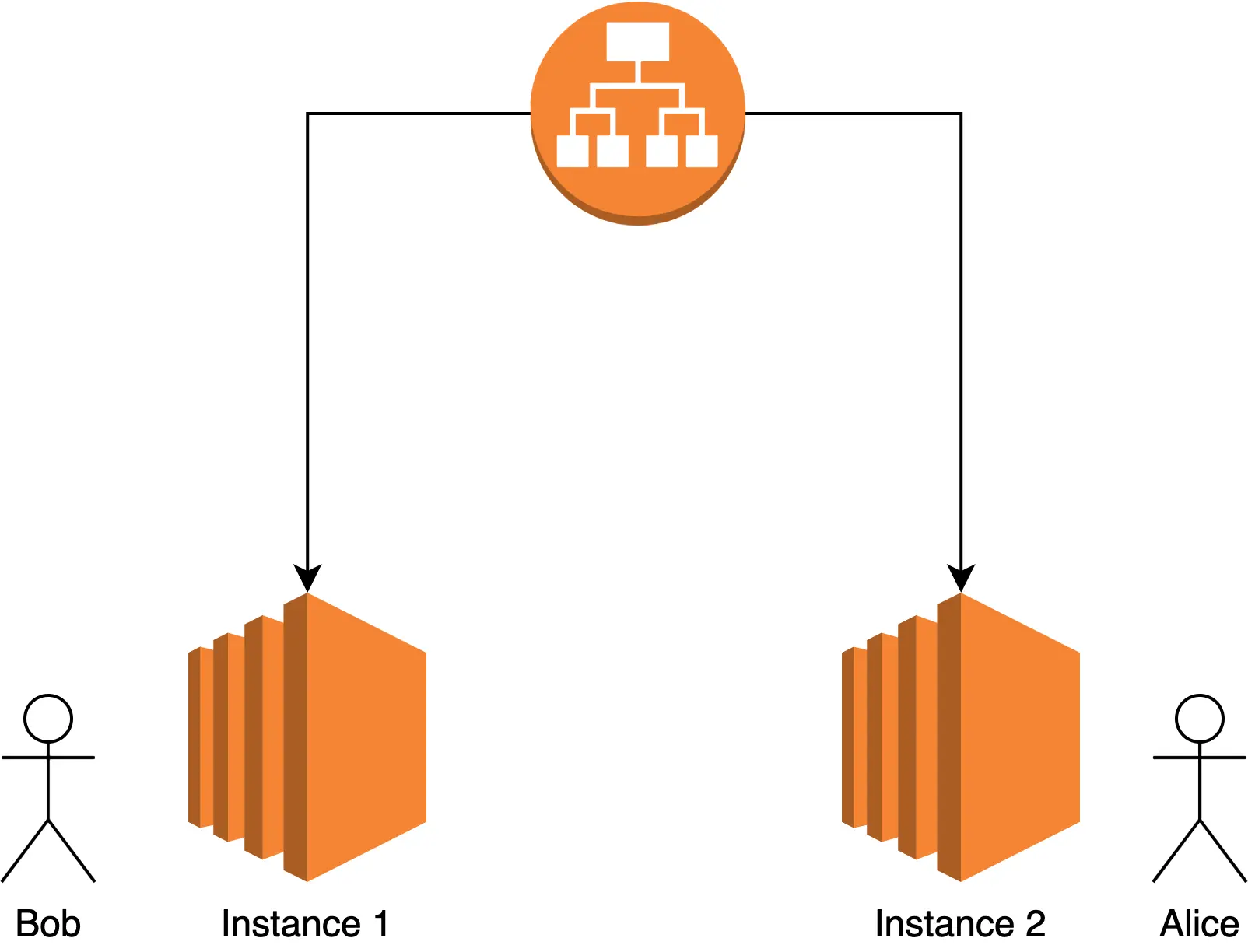

Suppose Bob connects to the service and wants to send a real-time message to Alice. When Bob connects, he sets up a socket connection to "Instance 1", and when Alice connects, she sets up a socket connection to "Instance 2".

This leads to a problem, however, because "Instance 1" has no knowledge of which users are connected to other machines, so it has no scalable way to pass the message on. In theory the instance could make a synchronous POST request to every other machine, but that does not scale: every message would have to be processed by every machine, which defeats the purpose of scaling out.
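The situation can be reproduced with a small in-memory model. This is only an illustrative sketch: `SocketInstance` and its methods are hypothetical names, and the "sockets" are plain arrays rather than real network connections.

```typescript
// Hypothetical in-memory model of one server instance and the sockets it holds.
class SocketInstance {
  readonly name: string;
  // userId -> messages pushed over that user's socket on THIS instance
  private readonly sockets = new Map<string, string[]>();

  constructor(name: string) {
    this.name = name;
  }

  connect(userId: string): void {
    this.sockets.set(userId, []);
  }

  // Delivers only if the recipient's socket lives on this instance.
  send(toUserId: string, text: string): boolean {
    const inbox = this.sockets.get(toUserId);
    if (inbox === undefined) {
      return false; // the recipient is connected to some other instance
    }
    inbox.push(text);
    return true;
  }

  received(userId: string): string[] {
    return this.sockets.get(userId) ?? [];
  }
}

const instance1 = new SocketInstance("Instance 1");
const instance2 = new SocketInstance("Instance 2");

instance1.connect("bob"); // the load balancer routed Bob here
instance2.connect("alice"); // and Alice here

// Bob's message arrives at Instance 1, which holds no socket for Alice.
const deliveredLocally = instance1.send("alice", "Hi Alice!");
console.log(deliveredLocally); // false
console.log(instance2.received("alice").length); // 0
```

Nothing in `instance1` can reach Alice's socket, which is exactly the gap a shared coordination layer has to fill.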

A common approach is to synchronize all messages through some kind of topic in a pub/sub pattern. Socket.IO supports this out of the box through a ready-made Redis adapter package. This approach solves the problem, but it requires an ElastiCache cluster, so the solution is no longer serverless.

Another concern is that using Redis solely for pub/sub may not be the best idea, because Redis is a general-purpose data store that supports a wide range of data structures and operations. Besides pub/sub it can be used for caching, messaging and other use cases. While Redis is an excellent choice for pub/sub when it is already part of a larger application, it may not be the best choice for a standalone pub/sub service, especially if the service needs high scalability and availability. In such cases a dedicated pub/sub service such as Amazon SNS or Google Cloud Pub/Sub may be a better option.
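To make the pub/sub idea concrete, here is a minimal sketch in which an in-memory broker stands in for Redis. `InMemoryBroker`, `PubSubInstance` and the `"chat"` topic are hypothetical names chosen for illustration; this is not the Socket.IO adapter API.

```typescript
interface ChatEnvelope {
  toUserId: string;
  text: string;
}

type Subscriber = (envelope: ChatEnvelope) => void;

// Hypothetical stand-in for a shared broker such as Redis pub/sub.
class InMemoryBroker {
  private readonly topics = new Map<string, Subscriber[]>();

  subscribe(topic: string, subscriber: Subscriber): void {
    const subscribers = this.topics.get(topic) ?? [];
    subscribers.push(subscriber);
    this.topics.set(topic, subscribers);
  }

  publish(topic: string, envelope: ChatEnvelope): void {
    for (const subscriber of this.topics.get(topic) ?? []) {
      subscriber(envelope);
    }
  }
}

class PubSubInstance {
  private readonly sockets = new Map<string, string[]>();
  private readonly broker: InMemoryBroker;

  constructor(broker: InMemoryBroker) {
    this.broker = broker;
    // Every instance listens on the same topic and delivers only to sockets it holds.
    broker.subscribe("chat", (envelope) => {
      this.sockets.get(envelope.toUserId)?.push(envelope.text);
    });
  }

  connect(userId: string): void {
    this.sockets.set(userId, []);
  }

  // Publish instead of delivering directly; the broker fans the message out.
  send(envelope: ChatEnvelope): void {
    this.broker.publish("chat", envelope);
  }

  received(userId: string): string[] {
    return this.sockets.get(userId) ?? [];
  }
}

const broker = new InMemoryBroker();
const first = new PubSubInstance(broker);
const second = new PubSubInstance(broker);

first.connect("bob");
second.connect("alice");
first.send({ toUserId: "alice", text: "Hi Alice!" });

console.log(second.received("alice")); // [ 'Hi Alice!' ]
```

Note that in this sketch every instance still receives each published message and simply ignores the ones addressed to users it does not hold.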

Serverless API Gateway to the rescue

To solve the problem above more effectively, we can use API Gateway with WebSocket support to manage the socket connections. With this solution, when a user connects to our WebSocket service, we store the connection information in a DynamoDB table. This information can include the connection ID, which uniquely identifies the connection, and other relevant metadata such as a user ID or session ID.
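A handler for the `$connect` and `$disconnect` routes might look roughly like the sketch below. The event fields `requestContext.connectionId` and `requestContext.routeKey` are what API Gateway passes to a WebSocket route handler; everything else is an assumption made for illustration: the `ConnectionStore` interface, the in-memory store that stands in for a DynamoDB table, and the idea of passing `userId` as a query string parameter.

```typescript
interface ConnectionRecord {
  connectionId: string;
  userId: string;
  connectedAt: number;
}

// Hypothetical storage abstraction; in a deployment this would wrap a DynamoDB table.
interface ConnectionStore {
  put(record: ConnectionRecord): Promise<void>;
  remove(connectionId: string): Promise<void>;
}

// Minimal subset of the event that API Gateway passes to a WebSocket route handler.
interface WebSocketEvent {
  requestContext: { connectionId: string; routeKey: string };
  queryStringParameters?: Record<string, string | undefined> | null;
}

// In-memory stand-in so the sketch runs without any AWS resources.
class InMemoryConnectionStore implements ConnectionStore {
  readonly records = new Map<string, ConnectionRecord>();

  async put(record: ConnectionRecord): Promise<void> {
    this.records.set(record.connectionId, record);
  }

  async remove(connectionId: string): Promise<void> {
    this.records.delete(connectionId);
  }
}

function createConnectionHandler(store: ConnectionStore) {
  return async (event: WebSocketEvent): Promise<{ statusCode: number }> => {
    const { connectionId, routeKey } = event.requestContext;

    if (routeKey === "$connect") {
      // Assumption: the client identifies itself with ?userId=... when connecting.
      const userId = event.queryStringParameters?.userId;
      if (!userId) {
        return { statusCode: 400 }; // reject connections we cannot attribute to a user
      }
      await store.put({ connectionId, userId, connectedAt: Date.now() });
    } else if (routeKey === "$disconnect") {
      await store.remove(connectionId);
    }

    return { statusCode: 200 };
  };
}
```

Keeping the store behind an interface means the same handler can be exercised locally with `InMemoryConnectionStore` and wired to a real table in production. A real service would more likely derive the user ID from an authorizer than from a query parameter.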

Once the connection information is stored in DynamoDB, we can use it to send messages to specific connections or to broadcast messages to all connected clients. To do this we use a Lambda function as the backend of our WebSocket service. The function can receive messages from the client, process them as needed and send responses back to the client. By combining API Gateway and DynamoDB with a serverless backend, we get a scalable and highly available WebSocket service that can handle a large number of concurrent connections.
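Sending to one connection and broadcasting to all of them could be sketched as follows. The `PostToConnection` function type and the `ConnectionDirectory` interface are hypothetical abstractions. In a deployment, `post` would typically wrap `PostToConnectionCommand` from `@aws-sdk/client-apigatewaymanagementapi`, which fails with an error named `GoneException` when the connection no longer exists. The sketch assumes that error name and uses it to prune stale entries.

```typescript
// Hypothetical abstraction over "push data to one WebSocket connection".
type PostToConnection = (connectionId: string, data: string) => Promise<void>;

// Hypothetical view of the stored connections (for example a DynamoDB table).
interface ConnectionDirectory {
  listConnectionIds(): Promise<string[]>;
  remove(connectionId: string): Promise<void>;
}

// True when the error signals that the connection no longer exists.
function isGone(error: unknown): boolean {
  return (
    typeof error === "object" &&
    error !== null &&
    (error as { name?: unknown }).name === "GoneException"
  );
}

// Sends the payload to one connection; prunes the entry if the connection is gone.
async function sendToConnection(
  directory: ConnectionDirectory,
  post: PostToConnection,
  connectionId: string,
  payload: unknown,
): Promise<boolean> {
  try {
    await post(connectionId, JSON.stringify(payload));
    return true;
  } catch (error) {
    if (!isGone(error)) {
      throw error; // unexpected failures are surfaced to the caller
    }
    await directory.remove(connectionId);
    return false;
  }
}

// Sends the payload to every stored connection and returns how many were reached.
async function broadcast(
  directory: ConnectionDirectory,
  post: PostToConnection,
  payload: unknown,
): Promise<number> {
  const connectionIds = await directory.listConnectionIds();
  const results = await Promise.all(
    connectionIds.map((id) => sendToConnection(directory, post, id, payload)),
  );
  return results.filter((reached) => reached).length;
}
```

Cleaning up on a gone connection is a design choice rather than a requirement: `$disconnect` is not guaranteed to fire for every dropped client, so stale rows tend to accumulate unless something removes them.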

Let us revisit our diagram. Instead of each instance handling socket connections, we use API Gateway, which has socket support. When a user connects to our socket, we store the connection in a DynamoDB table.

Instead of each service maintaining its own socket solution, we move all real-time logic into a separate real-time microservice. One way to do this is to set up an SQS queue that receives messages from other parts of the application and triggers a Lambda function. When a message arrives on the queue, the Lambda function processes it and sends the appropriate payload to the connected users' sockets.

For example, imagine a chat application where users can send messages to each other. When a user sends a message, it is added to an SQS queue. The SQS message triggers a Lambda function, which processes the message and determines which users should receive it. The Lambda function then sends the message to those users' sockets, using the connection information stored in DynamoDB.
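The queue-driven flow described above might be sketched like this. The `Records[].body` shape matches what Lambda receives from an SQS event source. The message format (`toUserIds`, `text`) and the `DeliveryDeps` interface are assumptions made for this example, standing in for a DynamoDB lookup and a call to the API Gateway connection API.

```typescript
// Minimal subset of the event Lambda receives from an SQS event source.
interface SqsEvent {
  Records: Array<{ messageId: string; body: string }>;
}

// Hypothetical format of the messages other services put on the queue.
interface QueuedChatMessage {
  toUserIds: string[];
  text: string;
}

// Hypothetical dependencies: a connection lookup and a push to one connection.
interface DeliveryDeps {
  findConnectionIds(userId: string): Promise<string[]>;
  post(connectionId: string, data: string): Promise<void>;
}

function createQueueHandler(deps: DeliveryDeps) {
  return async (event: SqsEvent): Promise<void> => {
    for (const record of event.Records) {
      const message = JSON.parse(record.body) as QueuedChatMessage;
      const data = JSON.stringify({ text: message.text });

      for (const userId of message.toUserIds) {
        // A user may have several open connections (for example several tabs).
        const connectionIds = await deps.findConnectionIds(userId);
        await Promise.all(connectionIds.map((id) => deps.post(id, data)));
      }
    }
  };
}
```

Because the handler only depends on `DeliveryDeps`, producers elsewhere in the application need to know nothing beyond the queue and the message format.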

This approach gives a scalable and decoupled architecture in which different parts of the application can put messages on the queue without knowing how they are processed and delivered to users. In addition, with a serverless architecture built on Lambda and SQS, the system scales automatically to handle large volumes of messages and users, and you only pay for what you use.